Data Conversion Framework for Dictionaries

Objectively, Dictionaries Conversion Framework is a lexical data conversion instrument that serves for the benefit of Oxford Global Languages initiative. In particular, DCF allows for fast and efficient data processing that results in creating online dictionaries for any of the known languages ever recorded in dictionaries. In practice, DCF is a sort of a concept, unconventional approach in solving the issues of data translation: costs and quality control.

The work on Dictionaries Conversion Framework started in September 2015, a couple of months after the Head of Data Processing Division at Digiteum and the guru of code analysis, Alex Marmuzevich, took up the challenge of optimizing the dictionary conversion processing set by Oxford University Press.

Big Challenge Overview

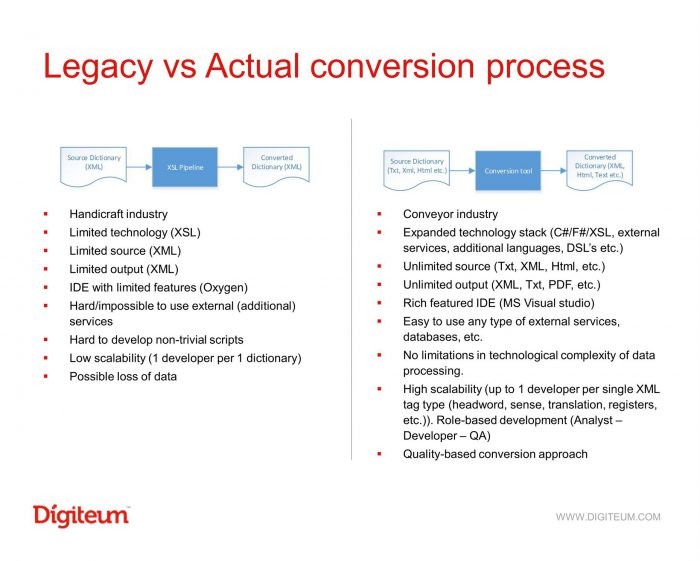

Hindi-English was the first dictionary that in a way pushed us to the idea of creating DCF. By the time Digiteum team took up the task, Oxford had already had the in-house dictionary conversion strategy that relied on a humanitarian approach, customized sets of operations for every language, and the efforts of narrowly focused specialists.

In a month, we effectively reached the goal and converted the dictionary. Along with that, we discovered the scale of the challenge, the imperfection of humanitarian approach to data conversion, and started to look for the options. Since we think IT, we realized that the way to optimal solutions paves through the IT approach. This is how we put together our IT-based team, launched the first QA on the operations, and kickstarted test driving development for dictionary conversion.

Issues to solve

- Diversity. It was one of our primary tasks – to create a unified convertor that would apply standard procedures to dictionary processing regardless of language and format.

- Exclusivity. We wanted to come up with the solution that would eliminate the need of exclusive knowledge and expertise for every single language and allow a small interchangeable team to work on any dictionary in any language effectively and at a quick rate.

- Quality. It was important to automate not only data conversion, but also dictionary verification and quality control processes so that to ensure zero-percent data loss at the output.

Unconventional Approach Based on Unique Expertise and Knowledge

We started to build the core of automated dictionary conversion with Urdu dictionary. At that time, we already knew that we needed to think outside the box to find the solution. Fortunately, it was predetermined by the circumstances we were in.

- First of all, we were too lazy to struggle with XSL and eager to invent something alternative and original to solve the challenge playfully and guarantee both quality and fun.

- We were not bounded by the approaches that computational linguistics specialists usually apply to solve similar tasks. Since we didn’t know the standards, we were free to create the ones of our own.

- It was almost a necessity to come up with something as unified and automated as the DCF that we have today. We didn’t want to diffuse operations and create a too complex system that would involve multiple linguists and language specialists.

Fortunately, by that time I have already accumulated a wide knowledge base and deep background in code analysis and the application of compiler theory in programming language data conversion. They appeared a striking match: the principles of code conversion fall on lexical data conversion needs perfectly. This is how we came up with the core of dictionaries conversion: we created the analog of a computer language compiler and applied it to lexical data.

Case Study: Document management system development for an agtech analytics company

DCF Outline

In short, DCF allows a team of 3 to take a dictionary in any language locked in almost any format, analyse and process dictionary content, and convert it into one of 3 target formats with a ready-to-use beta-version of the dictionary in 2-3 days after the starting point. The platform is developed in the way to guarantee quality control and eliminate data loss issue almost on 100%. Of course, this is the ideal scenario.

Check our web application development services to learn more.

Solving Challenges Step by Step

Language peculiarities

The operation of the Dictionaries Conversion Framework has not much to do with the peculiarities and specific features of the languages themselves. In other words, it doesn’t matter what language family the dictionary we convert belongs to, until we have a familiar dictionary structure in hand.

However, some language peculiarities leave a footprint in dictionary interpretation, rather than dictionary analysis.

For example, English dictionaries don’t take into consideration noun classification, while for Bantu languages (Kiswahili or Setswana) the classes of nouns are crucial and have impact on dictionary content. The same is relevant for word roots, that is of great importance for Hebrew and Arabic, but makes no difference to Japanese and Chinese.

At the same time, language peculiarities influence the workflow and the team, since we need to “read” languages at the initial analysis stage.

When the project was launched we had to deal with the dictionaries from non-European language family, such as Urdu and Hindi. To make it possible we engaged an expert in Middle East and Asian languages, Olga, and thus increased the team by adding a linguist. Today, we need the force of roughly 3 specialists for one dictionary: a linguist, a developer and a QA. Since the conversion can move as a conveyor-based workflow, this team allows converting one dictionary in 2-3 days with 4-6 dictionaries per week.

Quality control

In general, the quality of the dictionary we use as a source eventually determines the timeline of dictionary conversion. Since the process of conversion directly impacts dictionary entries, we would need to come up with a solution to verify the quality of conversion of these entries. Just think of it, these are millions of entries we are talking about.

Normally, the quality control process would take a team that divides the whole volume of lexical entries into batches, distributes them among its members, who then will verify and compile the results back together. Clearly, we were not happy with the process and came up with an alternative solution.

We determined that every dictionary consists of a number of more or less unique entries, that repeat themselves in structure across the dictionary. This pattern allowed us to compile a “compressed version” of every dictionary that would consist of the set of “chunks” that represent all unique entries. In general, the dictionary with 40,000 entries can be shrunk into the version with 200-400 chunks.

This “small” dictionary eventually would be used as a pilot version to verify all conversion operations on the dictionary. We assume that if the pilot version is correct, the complete set of entries in the dictionary is correct. This is how we shrink verification efforts by 100-200 times.

Read: 5 Tips for e-Learning platform development

Initial data quality

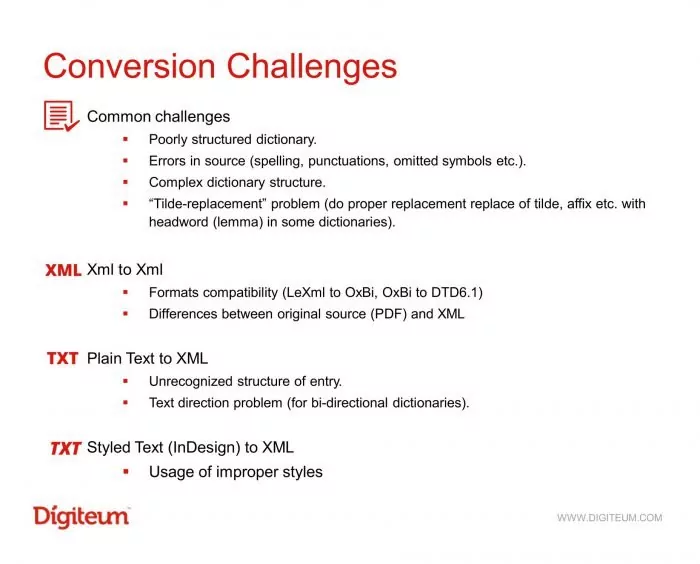

The problem appears when we get a messy dictionary from the very start. When I say messy, I mean that it can be either poorly structured or formatted, or the entries are given in a non-unified manner.

It should be noted that all the dictionaries, in general, are greatly formalized and have more or less similar structures in one class.

Take different dictionaries, for example, Hungarian, Romanian and Chinese. All of them have similar structures with a number of headwords and recognizable layouts. This is what makes them familiar to everyone.

But when the structure and the format of the dictionary are broken, we get a greater amount of unique entries and a bigger “compressed version.” Eventually, it complicates verification and slows down the process of conversion.

Unfortunately, the optimization of work on messy initial data doesn’t seem visible now, since the problems that arise with the input are diverse and rarely repeat themselves. Either it’s the layout, input format, or the faults and lapses in the original dictionary, the issues are always unique.

However, we do apply wits and tricks to solve each of the problems individually.

Once we started to work on Hindi Mono, we came across the challenge of compounds structures. Native speaker testing determined that around 22,000 of compounds required manual verification for the tilde-replacement problem. To solve this puzzle, we raised massive content volume in Hindi and combed the examples to determine the patterns of compound building and eliminate tilde factor. Thus, 22,000 variations that required manual check scaled down to 800 and one week of work. If not for this optimization solution, it might have taken 3-4 months for a linguist to go though all the entries.

That was a great example of strategic thinking, but this is only a single issue.

The Future of DCF

The best thing about the platform that we have developed is its scalability for linguistic-related projects, lexical data conversion, and possibly, data conversion in general. For now it’s hard to predict how this system can be applied to other projects. However, the expertise that Digiteum has nurtured along the way of DCF development, definitely, has multiple applications. We just need a relevant project to apply it.

Up to this point, Oxford Global Languages has just finished the first phase of its initiative with the launch of 30 dictionaries in 18 languages spread among 10 websites. Next comes the second phase with another 30 dictionaries to go. So there’s no way for us to cool off since both the DCF platform and Digiteum team have work to do.

Learn about other projects for Oxford Languages: forum development case study and corpus platform development.