It’s estimated that 2.5 billion GB of data is created daily.¹ Thanks to the spread of telemetry and automation solutions, emerging connected environments, swelling social media content and the turnover of traditional paper-based and digital information, today’s data takes an endless number of forms and serves countless purposes.

Organizations and companies across industries are trying to keep up with the growing volumes of data and gain visibility into it: to improve their products and services, develop IT infrastructure monitoring software to track performance, identify market trends, ensure operational excellence, or even build an entire business around data insights.

Yet data-focused enterprises can leverage only a fraction of their assets. The reasons vary, from excessive data fragmentation and lack of access to relevant data to security concerns. However, the most common factor is the nature of the data itself.

According to Gartner, more than 80% of the data enterprises deal with is unstructured, and another 10-15% is semi-structured.² This includes emails, social media feeds, PDFs, documents and all sorts of non-text media: images, video and audio recordings.

In other words, companies work with material up to 95% of which they don’t really know how to read, classify or organize into a hierarchy. In fact, most enterprises (71%) fail to manage and protect their unstructured data for a number of reasons, missing out on a significant amount of digital intelligence and, possibly, competitive advantage.³

Technologies, models, tools and specialists focused on big data are abundant. However, unstructured and semi-structured data remains problematic and comes with a number of barriers.

The first problem is volume: 80% of all data is a lot. Many companies can’t make use of the siloed, multi-source data they could benefit from simply because they lack the capacity to handle it. Others don’t even know about some of the data they create or hold in the first place.

Second, there’s the problem of the source. When we work with real-time data coming from trackers and sensors (for example, when using GPS in agriculture), we can predict the upcoming stream and know exactly which data formats we are dealing with. With semi-structured and unstructured data, we have to handle a variety of multi-format sources and datasets of differing value, and thus need a special tool able to sort out this cacophony.
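A minimal sketch of the first job such a tool has, assuming text payloads only: sniff each payload’s format and route it to a matching parser. `sniff_format` and `route` are hypothetical names, and the heuristics (try JSON, look for an HTML marker, try XML, fall back to plain text) are illustrative only; binary sources such as PDFs, images or audio would need entirely different detection.

```python
import json
import xml.etree.ElementTree as ET


def sniff_format(payload: str) -> str:
    """Guess whether a raw text payload is JSON, HTML, XML or plain text."""
    stripped = payload.lstrip()
    # JSON: starts like an object or array and actually parses
    if stripped[:1] in ("{", "["):
        try:
            json.loads(stripped)
            return "json"
        except json.JSONDecodeError:
            pass
    if stripped.startswith("<"):
        head = stripped[:200].lower()
        # Check HTML first: many real pages are not well-formed XML
        if head.startswith("<!doctype html") or "<html" in head:
            return "html"
        try:
            ET.fromstring(stripped)
            return "xml"
        except ET.ParseError:
            pass
    # Anything unrecognised falls back to plain text
    return "text"


def route(payloads):
    """Bucket payloads by detected format so each bucket can go to its own parser."""
    buckets = {}
    for payload in payloads:
        buckets.setdefault(sniff_format(payload), []).append(payload)
    return buckets


batch = ['{"id": 1}', "<entry><hw>cat</hw></entry>", "cat, n. a small animal"]
print({fmt: len(items) for fmt, items in route(batch).items()})
```

Routing by detected format keeps each downstream parser simple, since it only ever sees one kind of input.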

Despite the proven financial benefits of big data, cost remains one of the main reasons companies give up on some of their data assets.

Processing diverse data often requires the manual effort of narrow specialists to rule out mistakes, especially when the cost of a mistake is high, as with semi-structured medical records. Automating the processing of different data formats can also cost a pretty penny, since it requires integrating various tools and platforms. That said, the cost also depends on the data sources. For example, much satellite imagery is publicly available, so satellite farming may be less costly than other applications of big data.

Companies usually have data security in place for structured data, such as financial reports or patent details. It’s relatively easy to protect data that fits a row-and-column model, because you know exactly where your sensitive data is and how much of it you have to deal with.

Unstructured and semi-structured data may or may not contain sensitive information, such as personal and financial details, medical records, or even fragments of the company’s confidential information that end up in an email or a Slack message. Often, decision-makers don’t know what important information is hidden inside their datasets and, most importantly, how to identify it without overlooking a single bit.

It’s more complicated to derive meaning from heterogeneous data, and therefore to control it. One can use lexical analysis or NLP engines to go through millions of emails, but neither method guarantees accuracy. With sensitive information, even a single miss can be costly. To avoid losses, companies need tools that can understand unstructured and semi-structured data and extract specific information with high accuracy.
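To make the accuracy problem concrete, here is a deliberately simple lexical scanner in Python with two hypothetical regex patterns. It catches well-formed email addresses and card-like digit runs, but an obfuscated address such as "john dot smith at example dot com" slips straight through, which is exactly the kind of single miss described above. The patterns are illustrative assumptions, not a recommended PII detector.

```python
import re

# Hypothetical patterns for illustration; real PII detection needs far broader rules.
PATTERNS = {
    # user@domain.tld style addresses
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # 13-16 digits, optionally separated by single spaces or hyphens
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def scan(text):
    """Return (label, matched_text) pairs for every pattern hit in text."""
    hits = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((label, match.group()))
    return hits


message = (
    "Contact jane.doe@example.com, card 4111 1111 1111 1111. "
    "Backup: john dot smith at example dot com."
)
for label, value in scan(message):
    print(label, value)
# The obfuscated backup address is never reported: a silent miss.
```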

Today, companies can leverage all the data they need, including internal and third-party assets. From an organizational and management point of view, this requires building a data strategy, assigning data owners and an analytics team, taking targeted security precautions and defining a data-driven initiative: a business case that explains why one needs certain data insights and how to use them.

From a technology point of view, to leverage all available data, enterprises need to overcome the barriers above and find tools that tackle the main data problems: volume, source fragmentation, costly operations and quality control.

A solution that meets all these requirements can consistently extract quality content from different types of data, give enterprises a reliable source of the insights they need and, potentially, differentiate a company in the market with an invaluable asset: information.

Here are two working examples of solutions that enable efficient processing of unstructured and semi-structured lexical data.

Oxford University Press (OUP) is known as the creator of trusted human language technology tools. To build its products and services, OUP works with an enormous amount of lexical data, some of it unstructured and semi-structured. Super Corpus Platform (SCP) and Dictionaries Conversion Framework (DCF), built by Digiteum in cooperation with and for OUP, are two clear examples of efficient solutions that deal with unstructured and semi-structured lexical data respectively.

DCF was developed as a dictionary conversion solution that could outperform traditional methods in time, efficiency and quality.

In a nutshell, the framework can process massive volumes of semi-structured lexical data (dictionary entries) in almost any source format (e.g. XML, RTF, HTML or plain text) and convert it into a specified output format: LexML, DTD6 or basically any other format the requirements call for.

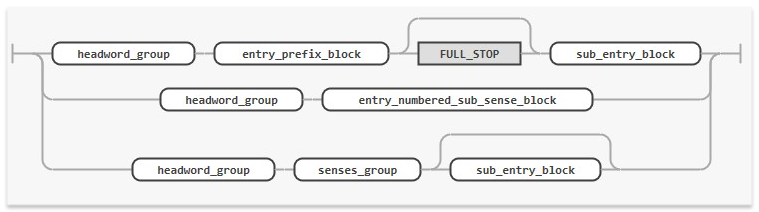

3 variants of a dictionary entry representation (partial)

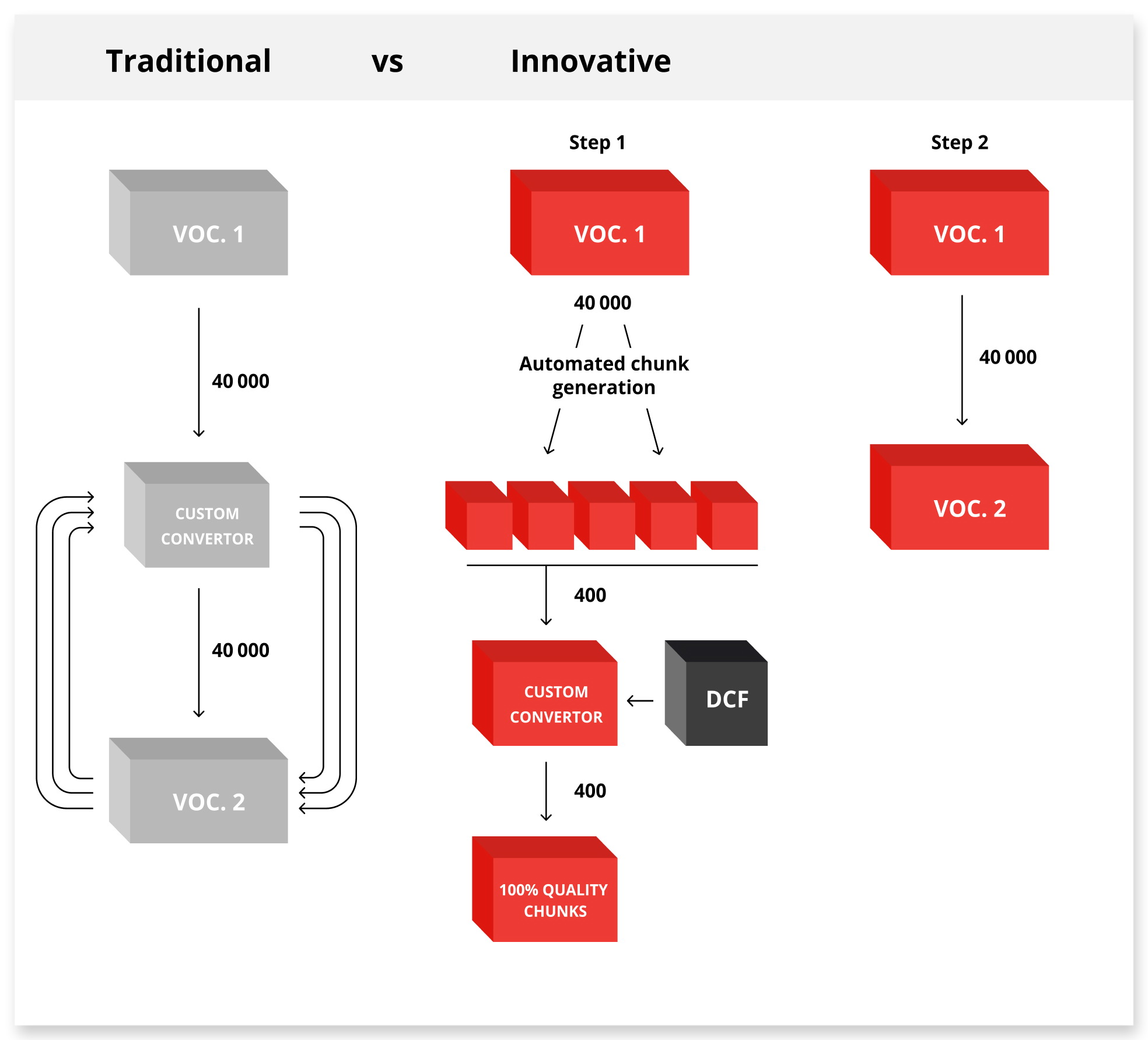
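To illustrate converting one kind of entry from several source formats into a single output shape, here is a minimal Python sketch. The source markup (`hw`, `pos`, `def`), the plain-text layout `headword (pos): definition` and the target `<entry>` element are all hypothetical; the sketch does not reproduce LexML or DCF’s actual libraries, and RTF is omitted. Each parser maps its format into a common intermediate record, and one serializer produces the output, so supporting a new format means adding one parser.

```python
import re
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

FIELDS = ("headword", "pos", "definition")
SOURCE_TAGS = ("hw", "pos", "def")  # assumed source field names


def from_xml(src):
    # Assumed source markup: <e><hw/><pos/><def/></e>
    root = ET.fromstring(src)
    return dict(zip(FIELDS, (root.findtext(t) for t in SOURCE_TAGS)))


class _HTMLEntry(HTMLParser):
    """Collect text from elements whose class is hw, pos or def (assumed markup)."""

    def __init__(self):
        super().__init__()
        self.fields, self._current = {}, None

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if cls in SOURCE_TAGS:
            self._current = cls

    def handle_endtag(self, tag):
        self._current = None

    def handle_data(self, data):
        if self._current:
            self.fields[self._current] = self.fields.get(self._current, "") + data


def from_html(src):
    parser = _HTMLEntry()
    parser.feed(src)
    parser.close()
    return dict(zip(FIELDS, (parser.fields.get(t) for t in SOURCE_TAGS)))


TEXT_ENTRY = re.compile(r"^(?P<hw>[^(]+?)\s*\((?P<pos>[^)]+)\)\s*:\s*(?P<def>.+)$")


def from_text(src):
    # Assumed plain-text layout: "headword (pos): definition"
    m = TEXT_ENTRY.match(src.strip())
    if m is None:
        raise ValueError(f"unrecognised entry: {src!r}")
    return dict(zip(FIELDS, (m["hw"], m["pos"], m["def"])))


PARSERS = {"xml": from_xml, "html": from_html, "text": from_text}


def convert(src, fmt):
    """Parse any supported source format, then emit one target XML shape."""
    entry = PARSERS[fmt](src)
    root = ET.Element("entry")
    for name in FIELDS:
        ET.SubElement(root, name).text = entry[name]
    return ET.tostring(root, encoding="unicode")


sources = [
    ("<e><hw>cat</hw><pos>noun</pos><def>a small animal</def></e>", "xml"),
    ('<p><b class="hw">cat</b> <i class="pos">noun</i> <span class="def">a small animal</span></p>', "html"),
    ("cat (noun): a small animal", "text"),
]
for src, fmt in sources:
    print(convert(src, fmt))  # all three print the same target entry
```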

Traditionally, to convert a dictionary of, say, 40,000 entries, one needs to build a custom converter (which grows more complex as new data comes in), put every entry through it, and then run multiple tests to check every single converted entry. This process takes weeks or months and requires serious manual work, with language specialists reviewing the results.

Dictionary conversion with DCF is streamlined into two major steps. First, a special tool automatically identifies unique chunks, that is, groups of similarly structured dictionary entries, across the whole dataset of 40,000 entries. The resulting chunks (roughly 400) then go through a custom converter written in C#, Python, Perl or Java. Relying on DCF, a set of libraries and algorithms that determine the conversion, the converter processes the chunks, enables immediate manual testing along the way (compare testing 400 chunks with testing 40,000 entries), identifies the rules that need to be added to the conversion, and produces a library of correctly converted chunks as output.
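How DCF’s tool decides which entries are "similarly structured" is not specified here. A plausible, hypothetical criterion is identical element tags and nesting, ignoring text content; the Python sketch below groups XML entries that way, with `signature` and `find_chunks` as illustrative names. At the scale quoted above, reviewing roughly 400 representatives instead of 40,000 entries means testing about 1% of the items.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict


def signature(element):
    """Structural fingerprint: tag names and nesting, ignoring all text content."""
    return (element.tag, tuple(signature(child) for child in element))


def find_chunks(entries):
    """Group XML entries by structure; each group is one chunk to test once."""
    groups = defaultdict(list)
    for src in entries:
        groups[signature(ET.fromstring(src))].append(src)
    return dict(groups)


entries = [
    "<e><hw>cat</hw><def>a small animal</def></e>",
    "<e><hw>dog</hw><def>a loyal animal</def></e>",
    "<e><hw>run</hw><def>move fast</def><ex>She runs daily.</ex></e>",
]
chunks = find_chunks(entries)
# One representative per chunk is what a specialist reviews
representatives = [group[0] for group in chunks.values()]
print(f"{len(entries)} entries -> {len(chunks)} chunks to test")
```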

The second step is simpler. Since the chunks have been converted correctly and thoroughly tested, all 40,000 entries they represent go through the flow and are converted automatically, without additional testing or iterations.
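Assuming chunks are keyed by a structural fingerprint (tag names and nesting, ignoring text), the second step can be sketched as a lookup: each entry’s fingerprint selects the conversion rule already validated on its chunk. The rule table and the `convert_all` helper are hypothetical. One design choice worth noting: entries that match no tested chunk are set aside rather than converted, so nothing untested slips into the output.

```python
import xml.etree.ElementTree as ET


def signature(element):
    """Structural fingerprint used to key chunks: tags and nesting, no text."""
    return (element.tag, tuple(signature(child) for child in element))


def convert_all(entries, rules):
    """Convert every entry with the rule validated for its chunk.

    Entries whose structure matches no tested chunk are returned separately
    instead of being converted by guesswork.
    """
    converted, unmatched = [], []
    for src in entries:
        root = ET.fromstring(src)
        rule = rules.get(signature(root))
        if rule is None:
            unmatched.append(src)
        else:
            converted.append(rule(root))
    return converted, unmatched


# One hypothetical rule, assumed already validated on its chunk's representative
SIMPLE_ENTRY = ("e", (("hw", ()), ("def", ())))
rules = {SIMPLE_ENTRY: lambda root: f"{root.findtext('hw')}: {root.findtext('def')}"}

done, flagged = convert_all(
    [
        "<e><hw>cat</hw><def>a small animal</def></e>",
        "<e><hw>dog</hw><def>a loyal animal</def></e>",
        "<e><hw>run</hw><ex>She runs daily.</ex></e>",
    ],
    rules,
)
```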

This optimized conversion delivers higher speed and performance and close to 100% output accuracy, thanks to strict testing at the very first step of the conversion flow.

Here are some benefits of this framework for semi-structured lexical data processing:

1) at least a tenfold reduction in the time and cost of lexical data conversion compared with traditional, personnel-heavy methods;

2) close to 100% output accuracy, which makes the framework well suited to extracting precise insights from raw semi-structured data with a near-zero loss rate;

3) no need for large data analytics teams or extensive specialist effort, thanks to automation and a structured workflow;

4) flexibility and scalability in output, allowing converted information to be delivered to various easy-to-use formats and databases.

Systems similar to DCF act as high-performance, cost-efficient solutions for processing semi-structured data and delivering insights with tested accuracy. Such solutions are optimized to extract sensitive and important information where loss must be kept to a minimum.

SCP is the second solution, built to process large volumes of raw lexical data: in this case, diverse English-language texts collected across the Internet to build an English corpus.

The system harvests English-language news articles collected by Event Registry throughout the day. This raw data then goes through several processing stages: filtration, deduplication, metadata enrichment with new attributes, and linguistic annotation.
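The four stages named above can be sketched as a chain of small Python functions. This is a minimal illustration, not SCP’s implementation: the length threshold, the SHA-256 fingerprint over lowercased, whitespace-normalized text, the added `word_count` and `source` attributes, and the regex tokenizer standing in for real linguistic annotation are all assumptions chosen for clarity.

```python
import hashlib
import re


def filter_docs(docs, min_words=5):
    """Filtration: drop documents too short to be useful corpus material."""
    return [d for d in docs if len(d["text"].split()) >= min_words]


def deduplicate(docs):
    """Deduplication: keep the first document for each normalized-text hash."""
    seen, unique = set(), []
    for d in docs:
        normalized = " ".join(d["text"].lower().split())
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(d)
    return unique


def enrich(docs):
    """Metadata enrichment: add derived attributes to each document."""
    return [
        {**d, "word_count": len(d["text"].split()), "source": d.get("source", "unknown")}
        for d in docs
    ]


def annotate(docs):
    """Annotation stand-in: split text into word and punctuation tokens."""
    return [{**d, "tokens": re.findall(r"\w+|[^\w\s]", d["text"])} for d in docs]


def process(docs):
    # Stages run in the order described: filter, dedupe, enrich, annotate
    return annotate(enrich(deduplicate(filter_docs(docs))))


raw = [
    {"text": "Markets rallied on Monday after the announcement."},
    {"text": "markets rallied on  Monday after the announcement."},
    {"text": "Too short."},
]
result = process(raw)
```

Exact hashing only catches copies that are identical after normalization; near-duplicates (lightly edited reprints) would need a fuzzier technique such as MinHash.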

Next, the system sends the processed data to Azure Cosmos DB, a document-oriented database service able to handle this volume of data and meet the system’s needs for performance and stability. The solution’s modular architecture, in turn, allows scalability: we can remove or add data processing tools and change data sources and storage services as our requirements change.
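The modularity described above can be illustrated with a small Python sketch: processing stages are plain functions that can be added or removed, and storage sits behind a minimal `Sink` interface so one backend can be swapped for another. All names here (`Pipeline`, `Sink`, `InMemorySink`) are hypothetical and not taken from SCP; the in-memory sink stands in for a document store. A Cosmos DB-backed sink could, for instance, wrap a container client from the `azure-cosmos` SDK and call its `upsert_item` method, but that is an assumption about one possible design, not a description of SCP’s code.

```python
from typing import Callable, List, Protocol

Doc = dict
Stage = Callable[[List[Doc]], List[Doc]]


class Sink(Protocol):
    """Anything that can persist a batch of processed documents."""

    def write(self, docs: List[Doc]) -> None: ...


class InMemorySink:
    """Stand-in for a document database such as Azure Cosmos DB (no Azure calls)."""

    def __init__(self) -> None:
        self.items: List[Doc] = []

    def write(self, docs: List[Doc]) -> None:
        self.items.extend(docs)


class Pipeline:
    """Ordered, editable list of stages feeding a swappable storage sink."""

    def __init__(self, stages: List[Stage], sink: Sink) -> None:
        self.stages = list(stages)
        self.sink = sink

    def add_stage(self, stage: Stage) -> None:
        self.stages.append(stage)

    def remove_stage(self, stage: Stage) -> None:
        self.stages.remove(stage)

    def run(self, docs: List[Doc]) -> List[Doc]:
        for stage in self.stages:
            docs = stage(docs)
        self.sink.write(docs)
        return docs


def lowercase(docs: List[Doc]) -> List[Doc]:
    return [{**d, "text": d["text"].lower()} for d in docs]


def count_chars(docs: List[Doc]) -> List[Doc]:
    return [{**d, "chars": len(d["text"])} for d in docs]


sink = InMemorySink()
pipeline = Pipeline([lowercase], sink)
pipeline.add_stage(count_chars)   # plug in a new processing tool
pipeline.run([{"text": "Hello"}])
pipeline.remove_stage(lowercase)  # take one out again
pipeline.run([{"text": "Hi"}])
```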

Built on Microsoft Azure Cosmos DB, the system can handle a tremendous amount of unstructured lexical data in a short time. It can digest anywhere from 80,000 to more than 8 million raw documents per day. In human terms, it can process all English-language news articles published on the Internet in a day in about 3 hours.
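For a sense of scale, spreading the daily volumes quoted above evenly across the 86,400 seconds of a day gives the following average rates. This is back-of-the-envelope arithmetic on those figures, not a measured benchmark; real traffic is unlikely to arrive uniformly.

$$
\frac{8 \times 10^{6}\ \text{documents}}{86\,400\ \text{s}} \approx 92.6\ \text{documents/s},
\qquad
\frac{8 \times 10^{4}\ \text{documents}}{86\,400\ \text{s}} \approx 0.93\ \text{documents/s}
$$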

Here are some benefits of SCP that show its efficiency with really big data:

1) The modular architecture and cloud-based technology behind the system enable high productivity and scalability: we can connect new data sources and databases or extend the data processing functionality, for example, by adding sentiment analysis.

2) The system processes enormous datasets in short periods, reducing the time, effort and cost of working with unstructured data.

3) SCP handles overloads and can scale its processing capacity up to tenfold.

Although SCP is a narrowly focused corpus software project, the principles and solutions behind it show potential for other types of data and fields of application. Platforms similar to SCP are well suited to massively growing datasets from various sources with different output requirements.

Both the Dictionaries Conversion Framework and the Super Corpus Platform demonstrate high performance in semi-structured and unstructured data processing, solve the problems associated with these types of data and deliver notable results in both quality and quantity. Such tools:

– tackle the problem of multiple sources and enable efficient data processing across different input formats and output requirements;

– produce high-quality data insights with high accuracy and minimal data loss;

– deal with large amounts of data within limited timeframes;

– handle serious overloads while maintaining high quality;

– don’t require oversized data analytics teams or a large number of narrow specialists;

– enable reasonable automation and optimize cost;

– scale flexibly to new sources, integrations, functionality and fields of application.

Businesses across industries and verticals already invest in data-driven initiatives. Most of these efforts focus on structured data, since it is easier to collect, understand and turn into readable insights.

Unstructured and semi-structured data, in turn, is drawing more attention because of its volume and the market opportunities it opens up across fields. With innovative big data solutions, decision-makers finally have the tools to decipher more complex datasets and derive value from larger portions of their data.

These are just some examples of how big data tools can enhance the efforts of decision-makers across different fields. In reality, there’s huge potential hidden in different types of data for almost everyone.